Introduction

In our last blog about PCA, we introduce the data compression problem and derive the PCA algorithm by hand. However, we don’t know what does PCA actually do to the raw data and what information is stored after compression. In this blog, we are trying to unveil the secrets behind the algorithm.

Maximize the Variance

When we apply PCA algorithm on a data matrix , we actually project each data point onto another space , where . The space, called the principal subspace, is where the variance of the projected data points (also called the principal components) is maximized. In other words, when the original data points are transformed into the principal subspace, they are most “spread out”. For example, suppose that there is a collection of four data points , we can obtain the raw data matrix:

Next, we compute and find its eigenpairs:

Now we want to compress the matrix . Here, we can only set and transform each data point into space. Therefore, we multiply by the principal eigenvector and obtain:

Note that each element of the principal component is the linear combination of all the elements of the original data point. Hence, the principal components retain most of information of original data points, while discarding some unimportant information such as noise. For original data points in , the variances of the two dimensions are:

For projected data point in the principal subspace, the variance is:

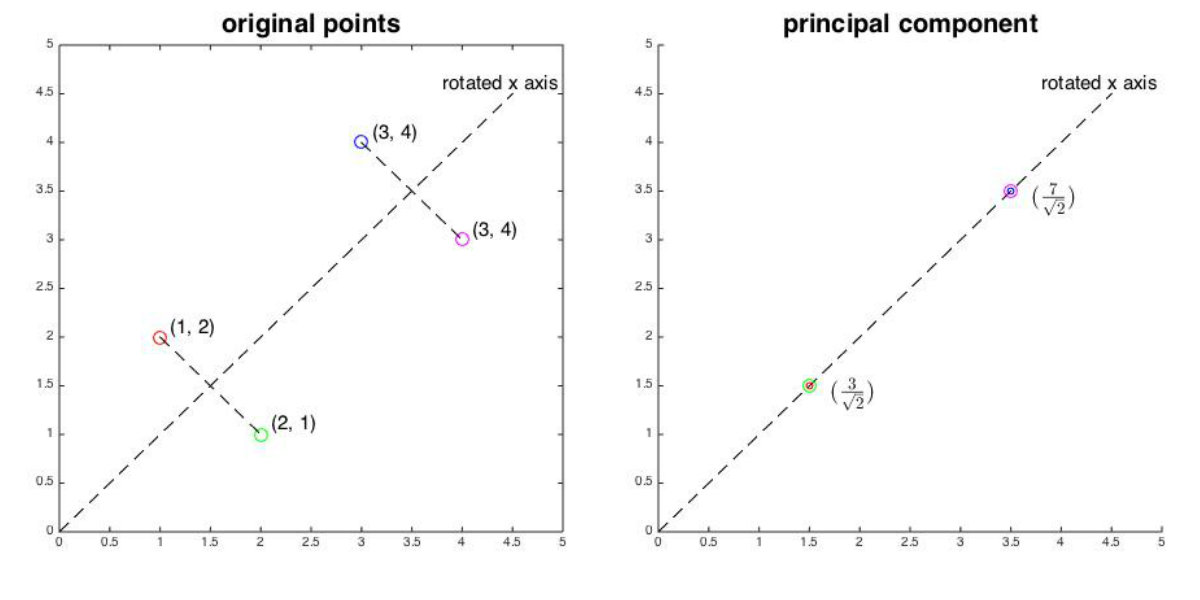

We can find that the variance of the first dimension of the principal component is larger than any dimension of the original points, which demonstrates that PCA represents each data point on an axis, along which the variance of the data is maximized. As the following shows, the eigen matrix can be viewed as a rotation 45 degrees counterclockwise of the axes. When we project each data point onto the rotated axis, we can see that they are most “spread out”.

In PCA, we project original data points onto axes corresponding to largest eigenvalues. Such axes store the raw data with maximized variances and thus retain most of information about original data. Therefore, we can concentrate on analyzing these principal components without worrying about the loss of unimportant information.

Using Covariance Matrix to Develop PCA

Knowing that PCA helps to maximize the variance of data points gives us another way to derive the algorithm, that is, computing the eigenpairs of the covariance matrix of the raw data. Suppose that there is a data matrix containing data points . We want to compress the raw data into space. For simplicity, we set , which means the principal component of each data point after transformation is a scalar value. Since the transformed scalar is the linear combination of all the elements of the original data point, there must be a vector to complete the transformation:

Without loss of generality, should be a unit vector such that . And we finally compress the data matrix into . And the unbiased variance of all points after projection is:

Substituting and we obtain:

where is the data covariance matrix:

where denotes the variance of the -th dimension of the original data points, denotes the covariance between -th and -th dimensions and . Since PCA maximizes the variance of original data points, we now maximize the projected variance:

The Rayleigh–Ritz theorem tells us the solution to the optimization problem above is the principal eigenvector (the eigenvector corresponds to the largest eigenvalue) of the covariance matrix .

Finally, we can eigendecompose the covariance matrix of the raw data matrix and find the principal eigenvectors to compress the original data. It works similarily to the eigendecomposition of matrix but not exactly the same, since the principal eigenvectors of and may not be the same. Considering our previous example:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

import numpy as np

M = np.array([[1,2], [2,1], [3,4], [4,3]])

Cov = np.cov(M.T) # Compute the covariance matrix of M

lams_1, vecs_1 = np.linalg.eig(np.dot(M.T, M)) # Eigendecompose M'M

lams_2, vecs_2 = np.linalg.eig(Cov) # Eigendecompose the covariance matrix

print("original data matrix:")

print(M)

print("eigenvalues of M'M:", lams_1)

print("eigenvalues of Cov:", lams_2)

# get the unique eigenvectors

for i in range(vecs_1.shape[1]):

if vecs_1[0, i] < 0: vecs_1[:, i] = vecs_1[:, i] * -1

for i in range(vecs_2.shape[1]):

if vecs_2[0, i] < 0: vecs_2[:, i] = vecs_2[:, i] * -1

print("eigenvectors of M'M: ")

print(vecs_1)

print("eigenvectors of Cov: ")

print(vecs_2)

print("The compressed M by eigendecomposing M'M:", np.dot(M, vecs_1[:, 0]))

print("The compressed M by eigendecomposing Cov:", np.dot(M, vecs_2[:, 0]))

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

original data matrix:

[[1 2]

[2 1]

[3 4]

[4 3]]

eigenvalues of M'M: [58. 2.]

eigenvalues of Cov: [2.66666667 0.66666667]

eigenvectors of M'M:

[[ 0.70710678 0.70710678]

[ 0.70710678 -0.70710678]]

eigenvectors of Cov:

[[ 0.70710678 0.70710678]

[ 0.70710678 -0.70710678]]

The compressed M by eigendecomposing M'M: [2.12132034 2.12132034 4.94974747 4.94974747]

The compressed M by eigendecomposing Cov: [2.12132034 2.12132034 4.94974747 4.94974747]

Here we got the same eigenvectors but different eigenvalues by eigendecomposing and , respectively. Therefore, the two methods obtain the same compressed data. Under situations where the two methods get different eigenvectors, we might obtain different compressed data:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

M = np.random.rand(4,2) * 4

Cov = np.cov(M.T) # Compute the covariance matrix of M

lams_1, vecs_1 = np.linalg.eig(np.dot(M.T, M)) # Eigendecompose M'M

lams_2, vecs_2 = np.linalg.eig(Cov) # Eigendecompose the covariance matrix

print("original data matrix:")

print(M)

print("eigenvalues of M'M:", lams_1)

print("eigenvalues of Cov:", lams_2)

# get the unique eigenvectors

for i in range(vecs_1.shape[1]):

if vecs_1[0, i] < 0: vecs_1[:, i] = vecs_1[:, i] * -1

for i in range(vecs_2.shape[1]):

if vecs_2[0, i] < 0: vecs_2[:, i] = vecs_2[:, i] * -1

print("eigenvectors of M'M: ")

print(vecs_1)

print("eigenvectors of Cov: ")

print(vecs_2)

print("The compressed M by eigendecomposing M'M:", np.dot(M, vecs_1[:, 0]))

print("The compressed M by eigendecomposing Cov:", np.dot(M, vecs_2[:, 0]))

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

original data matrix:

[[2.84662895 0.72347183]

[1.2427886 1.44384041]

[0.9416138 3.39384996]

[3.2100749 1.21335165]]

eigenvalues of M'M: [29.47256331 6.96500039]

eigenvalues of Cov: [0.32558503 2.33611369]

eigenvectors of M'M:

[[ 0.78512207 0.61934105]

[ 0.61934105 -0.78512207]]

eigenvectors of Cov:

[[ 0.72246974 0.69140254]

[ 0.69140254 -0.72246974]]

The compressed M by eigendecomposing M'M: [2.68302702 1.8699704 2.84123236 3.27177914]

The compressed M by eigendecomposing Cov: [2.55681353 1.89615209 3.02680397 3.15809639]

Usually, we prefer to directly use the covariance matrix to develop the PCA algorithm since it ensures that the variances of original data points are maximized.

Using Correlation Matrix to Develop PCA

Sometimes the variances of the dimensions in our data are significantly different. In this case, we need to scale the data to unit variance. In other hands, let and , we need to standardize the raw data points:

And then we obtain the standard matrix . Next, we compute the correlation matrix of the original matrix :

Finally, we use principal eigenvectors of the correlation matrix to develop the PCA.

The compressed data obtained by eigendecomposing covariance and correlation matrices is usually different. This is mainly because the process of standardizing raw data to gain correlation matrix is actually reducing the differences between the variances of different dimensions in original data. Which matrix to choose depends on the demands of the environmental settings.

Reduce Computational Complexity

Similar to the eigendecomposition of , when the situation comes, we can first compute the eigenpairs of or and then obtain the eigenvectors of covariance or correlation matrix by multiplication or , where is the eigenvector of matrix or . This operation can effectively reduce the computation complexity. See our last blog for details.

More Complete PCA Algorithm

- Given a set of data sample , create the raw data matrix or the standard matrix .

- Compute all eigenpairs of the symmetric matrix or the corvariance matrix or the corelation matrix . When the number of columns are much larger than the number of rows, we compute the eigenpairs of or or and use them to find the largest eigenpairs we need.

- Use eigenvectors corresponding to largest eigenvalues as columns to form the eigenmatrix .

- Apply matrix multiplication to compress the raw data.

- Use to approximately reconstruct the raw data when necessary.

Conclusion

In this blog, we open the black box of the PCA and develop a more complete algorithm. We hope this blog can help understand the PCA algorithm more deeply.

Reference

- Pattern Recognition and Machine Learning

- Mining of Massive Datasets

- Data, Covariance, and Correlation Matrix