Introduction

Quadratic optimization problems appear frequently in the fields of engineering and computer science. Lots of problems in the real world can be expressed as the minimization or maxmization of some energy/cost function, with or without constraints. And the simplest kind of such a function is a quadratic function. This blog introduces the matrix forms of pure quadratic equations and two common quadratic optimization problems.

Quadratic Equation and Symmetric Matrix

A pure quadratic equation, that is, an equation with only quadratic terms, can be expressed as:

where are variables, are constant coefficients and is a constant. Denote the vector and the symmetric matrix . Then we can restate the quadratic equation above as the matrix multiplication:

Similarly, pure quadratic expressions with multiple variables can also be represented as corresponding matrix forms:

Quadratic Function and Eigenvectors

Usually, we are interested in estimating the value of a quadratic function with or without constraints:

In this case, the eigenvectors of the symmetric matrix tells us the information about the gradient direction of the function . For example, suppose that and , we first compute the eigenvectors and eigenvalues of the matrix (Methods for computing eigenpairs are discussed in this blog):

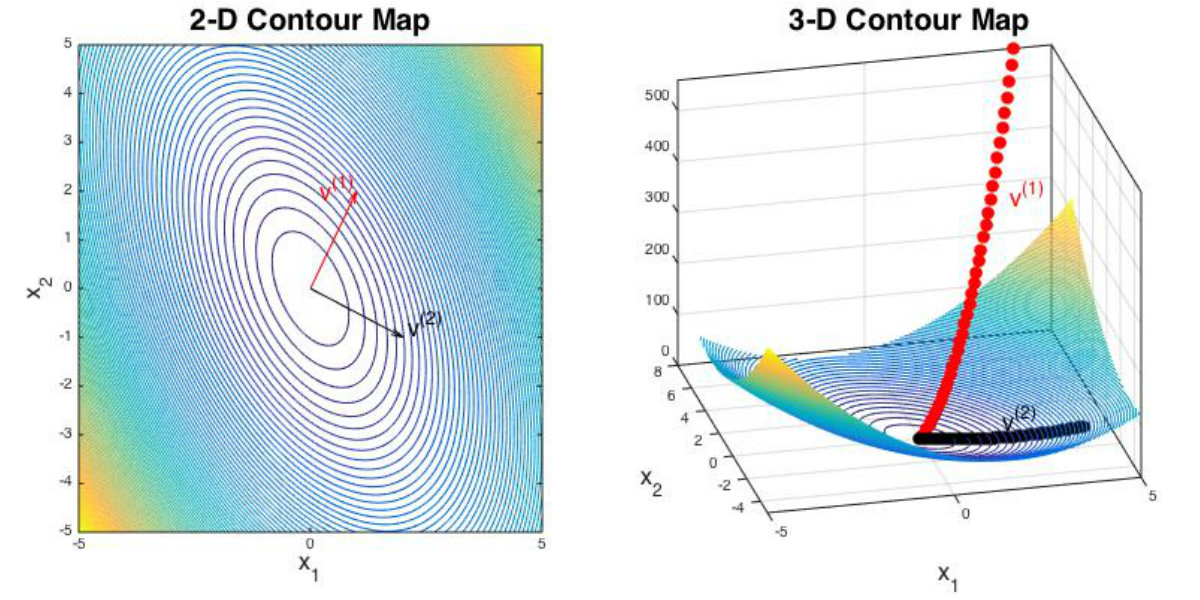

Next, we draw the contour map of the function and mark the directions of the two eigenvectors:

We can see that the direction of the eigenvector with largest eigenvalue () indicates the steepest gradient direction of while the eigenvector with smallest eigenvalue () indicates the most gradual gradient direction. In other words, the quadratic function changes fastest along the direction of while changes slowest along the direction of .

Quadratic Optimization Problems

In this section, we introduce two common quadratic optimization problems and their corresponding theories.

Rayleigh–Ritz Ratio

For the following quadratic problems:

where and , we can find the solution according to the Rayleigh–Ritz theorem:

The ratio is known as the Rayleigh–Ritz ratio. When , we have , and we can normalize each into a unit eigenvector and obtain the optimized solution:

This conclusion is essential for the Principle Component Analysis (PCA) algorithm, as we will see in my later blogs. In addition, we can derive a corollary from the Rayleigh–Ritz theorem:

where denotes the orthogonal complement.

Positive Definite Quadratic Function

Another common quadratic optimization problem is:

where and . If is a positive definite symmetric matrix, we can obtain the optimized solution through the following proposition:

In order to prove the proposition above, the first thing we need to know is that if is symmetric, which can be easily proved by Rayleigh–Ritz theorem. Then we can prove the proposition above:

Note that since is symmetric. Hence, the optimized solution of is the solution of .

Conclusion

In this blog, we introduce the concept of quadratic optimization problems, the relationship between quadratic function and symmetric matrix and two common quadratic optimization problems with their solutions.

Reference

- Deep Learning

- Principles of Mathematics in Operations Research

- CIS 515 Fundamentals of Linear Algebra and Optimization: Chapter 12